DDR, GDDR, and HBM Memory Speeds and Bandwidth

The performance of modern computers is significantly influenced by the speed and type of memory used. DDR, GDDR, and HBM memories are each optimized for a different purpose: main system memory for CPUs, video memory for graphics cards, and compute workloads that need very high bandwidth. In this post, I compare the speeds and key parameters of these memory types.

The performance of computer hardware standards is often advertised using theoretical maximum values measured under ideal laboratory conditions. In practice, transfer rates can be limited by device controllers, temperature, or other bottlenecks. These figures don't necessarily reflect real-world speeds, but they are excellent for comparison purposes because they clearly show the differences between technological generations.

In the tables below, I provide the theoretical maximum value for each standard, rounded for readability and comparison, and expressed in bytes per second where applicable (MB/s for DDR, GB/s for GDDR and HBM). I wrote about computer data transfer and storage standards, units of measurement, speeds, and their theoretical foundations here.

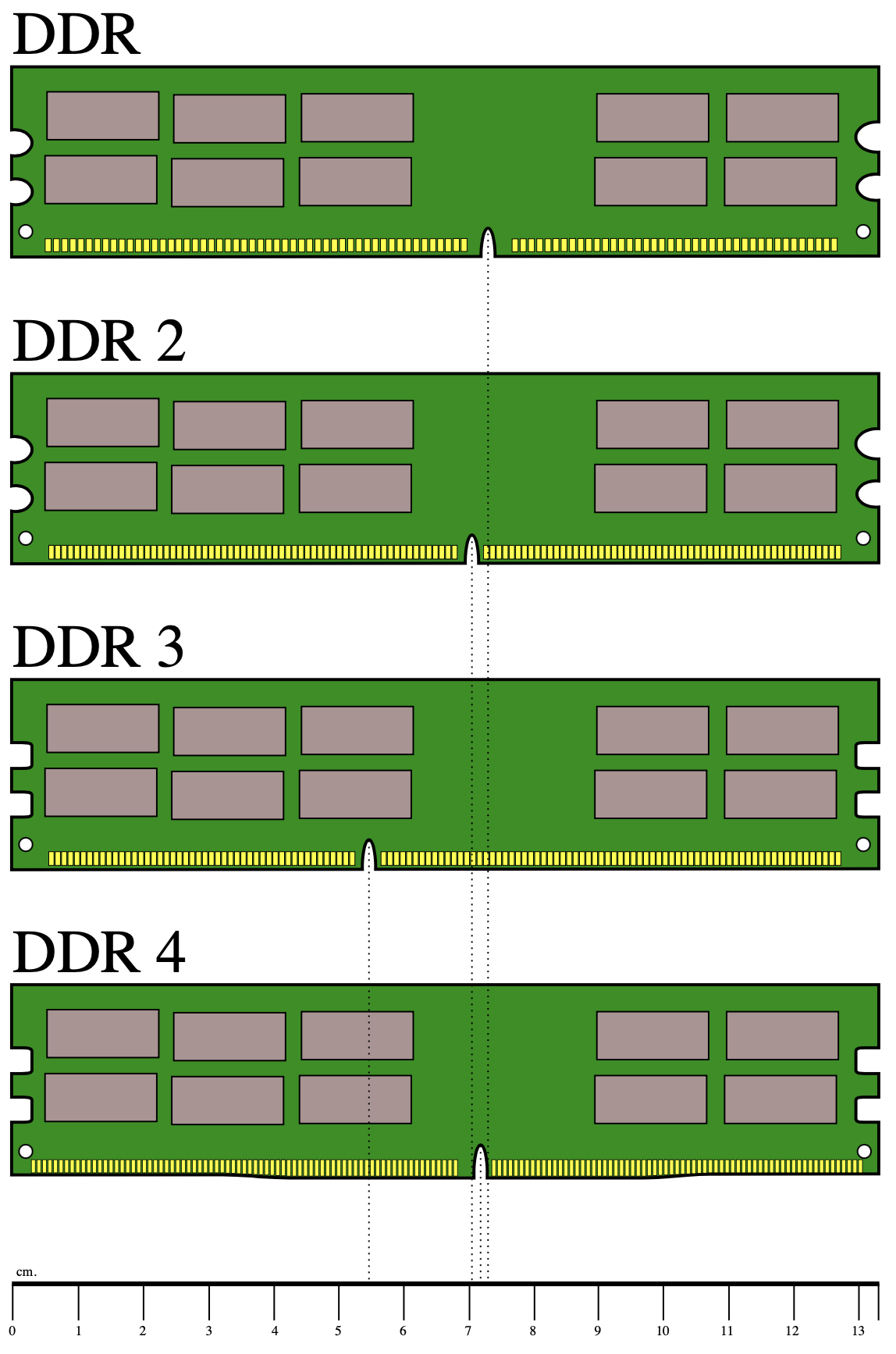

DDR SDRAM (System Memory)

Computer memory (RAM - Random Access Memory) serves as the computer's temporary, fast-access data storage. Modern RAM modules use DDR (Double Data Rate) technology, meaning data transfer occurs on both the rising and falling edges of the clock signal, effectively doubling the data transfer rate compared to the original SDRAM.

The original SDRAM (sometimes referred to as SD or SDR RAM to distinguish it from DDR) operated at clock speeds of 66-133 MHz. Since it wasn't "double data rate," its effective data transfer rate (in transfers per second) matched its clock speed (in cycles per second).

MT/s (megatransfers per second) indicates how many million data transfers the memory performs per second. For DDR memory this is twice the clock frequency, because two transfers occur per clock cycle: one on the rising edge and one on the falling edge of the clock signal.

Let's take DDR2-400 memory as an example:

- Its I/O bus clock speed is 200 MHz.

- Since it uses DDR technology (double data rate), the actual transfer rate is double the clock speed: 400 MT/s.

To visualize this better:

- A 200 MHz clock speed means 200 million clock cycles per second.

- In each cycle, 2 data transfers happen (rising and falling edge).

- Therefore, the total transfers are: 200 million cycles/sec × 2 transfers/cycle = 400 million transfers per second (400 MT/s).

This differs from bandwidth (MB/s), which measures the actual amount of data moved. Bandwidth is calculated by multiplying the MT/s value by the memory bus width (typically 8 bytes for a standard 64-bit DDR DIMM): 400 MT/s × 8 bytes/transfer = 3200 MB/s.
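The two steps above (clock to transfer rate, transfer rate to bandwidth) can be sketched in a few lines of Python. The function names are my own, chosen for illustration, and the defaults assume double data rate and a 64-bit bus as described in the text.

```python
def transfer_rate_mt_s(clock_mhz: float, transfers_per_cycle: int = 2) -> float:
    """Millions of transfers per second for a given I/O clock (2 per cycle for DDR)."""
    return clock_mhz * transfers_per_cycle


def bandwidth_mb_s(rate_mt_s: float, bus_width_bits: int = 64) -> float:
    """Theoretical peak bandwidth: transfers per second times bytes per transfer."""
    return rate_mt_s * (bus_width_bits / 8)


rate = transfer_rate_mt_s(200)    # DDR2-400 runs a 200 MHz clock
print(rate)                       # 400
print(bandwidth_mb_s(rate))       # 3200.0
```

Passing `transfers_per_cycle=1` reproduces the original single-data-rate SDRAM case, where the transfer rate equals the clock speed.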

| Version | Transfer Rate (MT/s) | Module Name | Release Year | Clock Speed (MHz) | Bandwidth (MB/s)* |
|---|---|---|---|---|---|
| DDR | 200-400 | PC-1600 - PC-3200 | 1998 | 100-200 | 1,600 - 3,200 |
| DDR2 | 400-1066 | PC2-3200 - PC2-8500 | 2003 | 200-533 | 3,200 - 8,533 |
| DDR3 | 800-2133 | PC3-6400 - PC3-17000 | 2007 | 400-1066 | 6,400 - 17,066 |
| DDR4 | 1600-3200+ | PC4-12800 - PC4-25600+ | 2014 | 800-1600+ | 12,800 - 25,600+ |
| DDR5 | 4800-8000+ | PC5-38400 - PC5-64000+ | 2020 | 2400-4000+ | 38,400 - 64,000+ |

*Bandwidth calculated for a standard 64-bit (8 Byte) wide memory interface (single DIMM). Dual-channel or quad-channel configurations multiply this bandwidth.
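To illustrate the multi-channel note, here is a minimal sketch that scales the single-DIMM figure by the number of channels. It assumes ideal scaling (identical modules in every channel, no controller overhead), which real systems only approximate; the function name is hypothetical.

```python
def system_bandwidth_mb_s(rate_mt_s: int, channels: int = 1,
                          bytes_per_transfer: int = 8) -> int:
    """Theoretical peak bandwidth across all channels, assuming ideal scaling."""
    return rate_mt_s * bytes_per_transfer * channels


# DDR4-3200 in single-, dual-, and quad-channel configurations
for channels in (1, 2, 4):
    print(channels, system_bandwidth_mb_s(3200, channels))
# 1 25600
# 2 51200
# 4 102400
```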

Manufacturers market memory modules using different notations, such as version number (DDR4), transfer rate (3200 MT/s), or module name (PC4-25600). This can be confusing, but the table above helps identify equivalent modules. For example, DDR4-3200 and PC4-25600 refer to the same memory speed specification.
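The relationship between the two notations is simply a division by 8, since the number in the module name is the bandwidth in MB/s. The helper below is a sketch under that assumption. `parse_module_name` is a name I made up, and it only handles the plain `PCn-NNNNN` form used in the table, not vendor suffixes.

```python
import re


def parse_module_name(name: str) -> tuple[int, int]:
    """Return (DDR generation, approximate MT/s) for a name like 'PC4-25600'."""
    match = re.fullmatch(r"PC(\d*)-(\d+)", name.strip().upper())
    if match is None:
        raise ValueError(f"unrecognized module name: {name!r}")
    generation = int(match.group(1) or 1)    # plain 'PC-3200' is first-generation DDR
    bandwidth_mb_s = int(match.group(2))     # the number after the dash is MB/s
    return generation, round(bandwidth_mb_s / 8)


print(parse_module_name("PC4-25600"))   # (4, 3200)
print(parse_module_name("PC-3200"))     # (1, 400)
```

Because some module names are rounded (PC3-17000 stands for 17,066 MB/s), the result can be slightly off for those speeds: `PC3-17000` yields 2125 rather than 2133.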

DDR ECC Memory

ECC (Error-Correcting Code) memory is a special type of memory primarily used in servers, workstations, and other systems requiring high reliability. DDR ECC memory differs from standard non-ECC DDR memory in its error-correction capabilities.

- Error Correction: ECC memory can automatically detect and correct single-bit errors, and it can detect (but not correct) double-bit errors. This significantly reduces the risk of data corruption and system crashes.

- Extra Bits: An ECC memory module typically uses extra bits per data word (e.g., 72 bits for 64 bits of data) to store the error-checking codes, enabling error correction.

- Compatibility: Not all motherboards support ECC memory. Most server and workstation motherboards are compatible, but typical consumer desktop motherboards are not. The CPU must also support ECC.

- Speed and Latency: ECC memory might be slightly slower or have higher latency than comparable non-ECC DDR memory due to the overhead of the error-checking process.

- DDR Generations: ECC technology is available for all modern DDR versions (DDR, DDR2, DDR3, DDR4, DDR5) and is commonly used in servers to enhance system stability.
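To make the idea of "extra bits" concrete, here is a toy sketch of single-error correction with double-error detection. It uses an extended Hamming code over 4 data bits plus 4 check bits, which I picked only because it is small enough to read. Real ECC modules protect 64 data bits with 8 check bits, and the exact code a given memory controller uses is not something this example claims to reproduce.

```python
from functools import reduce
from operator import xor

DATA_POSITIONS = (3, 5, 6, 7)     # non-power-of-two slots hold data bits
PARITY_POSITIONS = (1, 2, 4)      # power-of-two slots hold Hamming parity bits


def syndrome(word: list[int]) -> int:
    """XOR of the positions (1..7) whose bit is set; 0 for a valid word."""
    return reduce(xor, (pos for pos in range(1, 8) if word[pos]), 0)


def encode(data: list[int]) -> list[int]:
    """Pack 4 data bits into an 8-bit word; index 0 is the overall parity bit."""
    word = [0] * 8
    for pos, bit in zip(DATA_POSITIONS, data):
        word[pos] = bit
    s = syndrome(word)
    for pos in PARITY_POSITIONS:
        word[pos] = 1 if s & pos else 0
    word[0] = sum(word[1:]) % 2           # make the total number of 1s even
    return word


def decode(word: list[int]) -> tuple[list[int], str]:
    """Return (data bits, status), fixing a single flipped bit if there is one."""
    word = list(word)
    s = syndrome(word)
    parity_even = sum(word) % 2 == 0
    if s == 0 and parity_even:
        status = "ok"
    elif not parity_even:
        word[s] ^= 1                      # s == 0 means the overall parity bit flipped
        status = "corrected"
    else:
        status = "uncorrectable"          # two flips: detected, cannot be repaired
    return [word[pos] for pos in DATA_POSITIONS], status


stored = encode([1, 0, 1, 1])
one_flip = stored.copy()
one_flip[5] ^= 1
two_flips = one_flip.copy()
two_flips[2] ^= 1

print(decode(stored))         # ([1, 0, 1, 1], 'ok')
print(decode(one_flip))       # ([1, 0, 1, 1], 'corrected')
print(decode(two_flips)[1])   # uncorrectable
```

Three or more flipped bits are outside what this scheme guarantees, and the toy decoder may misreport them.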

ECC memory is typically employed in critical systems where data integrity and continuous operation are essential, such as financial services, healthcare systems, scientific research, and high-performance computing environments.

GDDR (Graphics Memory)

GDDR (Graphics Double Data Rate) memory is designed specifically for graphics cards (GPUs). It generally runs at higher transfer rates than standard DDR system memory and is optimized for the high-bandwidth needs of graphics rendering and parallel processing. GDDR chips typically have a wider interface (e.g., 32-bit per chip) than standard DDR chips, and a graphics card combines multiple chips into a very wide overall memory bus (e.g., 128-bit, 256-bit, 384-bit).

GT/s (GigaTransfers per second) indicates the number of data transfer operations per second, similar to MT/s for DDR memory, but scaled by 1000 (Giga vs. Mega). It represents the raw transfer speed, not the final bandwidth in Bytes/second.

| Version | Release Year | Clock Speed (MHz) | Transfer Rate (GT/s) | Typical Bandwidth (GB/s)** |
|---|---|---|---|---|
| GDDR2 | 2002 | 400–500 | 0.8–1.0 | ~12.8 – 16 |
| GDDR3 | 2004 | 800–1000 | 1.6–2.0 | ~51.2 – 80 |
| GDDR4 | 2006 | ~900–1150 | 1.8–2.3 | ~86 – 110 |
| GDDR5 | 2008 | ~1250–2000 | 5.0–8.0 | ~160 – 384 |
| GDDR5X | 2016 | ~1250–1750 | 10.0–14.0 | ~320 – 540 |
| GDDR6 | 2018 | ~1750–2500 | 14.0–20.0 | ~448 – 960 |
| GDDR6X | 2020 | ~1188–1438 | 19.0–23.0 | ~760 – 1104 |

**Bandwidth values are illustrative examples for typical high-end graphics cards of the era, calculated as transfer rate (GT/s) × bus width (bits) / 8. Actual bandwidth depends heavily on the specific GPU's memory bus width. Clock speed is the memory clock (the command clock on GDDR5 and later): the transfer rate is 2× that clock for GDDR2 through GDDR4, 4× for GDDR5, 8× for GDDR5X and GDDR6, and 16× for GDDR6X, which uses PAM4 signaling.
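The footnote's formula is easy to check in code. The sketch below pairs transfer rates from the table with bus widths mentioned earlier in this section. Those pairings are my own inference about which configurations produce the table's figures, and the function names are hypothetical.

```python
def gddr_bandwidth_gb_s(rate_gt_s: float, bus_width_bits: int) -> float:
    """Peak bandwidth in GB/s: transfers per second times bytes per transfer."""
    return rate_gt_s * bus_width_bits / 8


def chip_count(bus_width_bits: int, bits_per_chip: int = 32) -> int:
    """Number of memory chips needed to fill the bus, assuming 32-bit chips."""
    return bus_width_bits // bits_per_chip


print(gddr_bandwidth_gb_s(14.0, 256))   # 448.0  (low end of the GDDR6 row)
print(gddr_bandwidth_gb_s(23.0, 384))   # 1104.0 (high end of the GDDR6X row)
print(chip_count(384))                  # 12
```

The same transfer rate on a narrower bus gives proportionally less bandwidth, which is why two cards with the same memory type can differ so much.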

HBM (High Bandwidth Memory)

HBM (High Bandwidth Memory) takes a fundamentally different approach: memory dies are stacked vertically and connected to the processor (often a GPU or specialized accelerator) through an extremely wide interface (e.g., 1024-bit or wider per stack) on an interposer. This allows enormous data transfer rates at lower clock speeds and lower power consumption than GDDR needs to reach similar bandwidth. HBM3E, for instance, can exceed 1 TB/s of bandwidth per stack. HBM is primarily used in high-performance computing (HPC), artificial intelligence (AI) accelerators, and high-end graphics cards where maximum memory bandwidth is critical.

| Version | Release Year | Bandwidth per Stack (GB/s) |
|---|---|---|
| HBM | 2013 | ~128 |
| HBM2 | 2016 | ~256 - 307 |
| HBM2E | 2018 | ~307 - 460 |
| HBM3 | 2022 | ~819 |
| HBM3E | 2023 | ~1,200+ |
| HBM4 (Projected) | ~2026 | ~1,500 - 2,000+ |
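Working backwards from the table shows how modest the per-pin speed is. The sketch below derives the implied data rate per pin from the per-stack bandwidth, assuming the 1024-bit interface mentioned above. HBM4 is left out because it falls under the "or wider" case. The function name is my own.

```python
def per_pin_rate_gbit_s(stack_bandwidth_gb_s: float,
                        interface_bits: int = 1024) -> float:
    """Implied data rate per pin in Gbit/s for one stack."""
    return stack_bandwidth_gb_s * 8 / interface_bits


# Upper per-stack figures from the table, 1024-bit interface assumed
for name, gb_s in [("HBM", 128), ("HBM2", 307), ("HBM2E", 460),
                   ("HBM3", 819), ("HBM3E", 1200)]:
    print(f"{name}: {per_pin_rate_gbit_s(gb_s):.1f} Gbit/s per pin")
# HBM: 1.0 Gbit/s per pin
# HBM2: 2.4 Gbit/s per pin
# HBM2E: 3.6 Gbit/s per pin
# HBM3: 6.4 Gbit/s per pin
# HBM3E: 9.4 Gbit/s per pin
```

Even HBM3 at roughly 6.4 Gbit/s per pin runs well below the 14 to 20 GT/s listed for GDDR6, which fits the point that HBM gets its bandwidth from width rather than speed.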

Summary Comparison

- DDR SDRAM: Standard system RAM used with CPUs. Offers a balance of speed, capacity, and cost. Bandwidth increases with generations (DDR3, DDR4, DDR5) and by using multiple channels (dual, quad). ECC variants provide reliability for servers/workstations.

- GDDR SDRAM: Optimized for graphics cards (GPUs). Prioritizes very high bandwidth through faster clock speeds/transfer rates and wide memory buses on the graphics card. Essential for gaming and GPU-accelerated tasks. Generally higher cost and power consumption than DDR for equivalent capacity.

- HBM: Designed for maximum bandwidth density and power efficiency. Uses stacked memory dies and extremely wide interfaces. Found in high-end GPUs, AI accelerators, and HPC systems where data throughput is paramount. Highest cost and typically lower capacities compared to DDR/GDDR.