Nvidia Graphics Cards Suitable for Running AI

Many people still associate graphics cards primarily with gaming, yet GPUs are capable of much more. Their architecture is well suited to parallel computation, which is essential for training and running deep learning models. Consider this: a modern LLM has billions of parameters, and running it means applying the same arithmetic to huge numbers of them at once. This kind of massively parallel processing is the real strength of GPUs, whereas traditional CPUs (central processing units), with far fewer cores, lag behind in this regard.

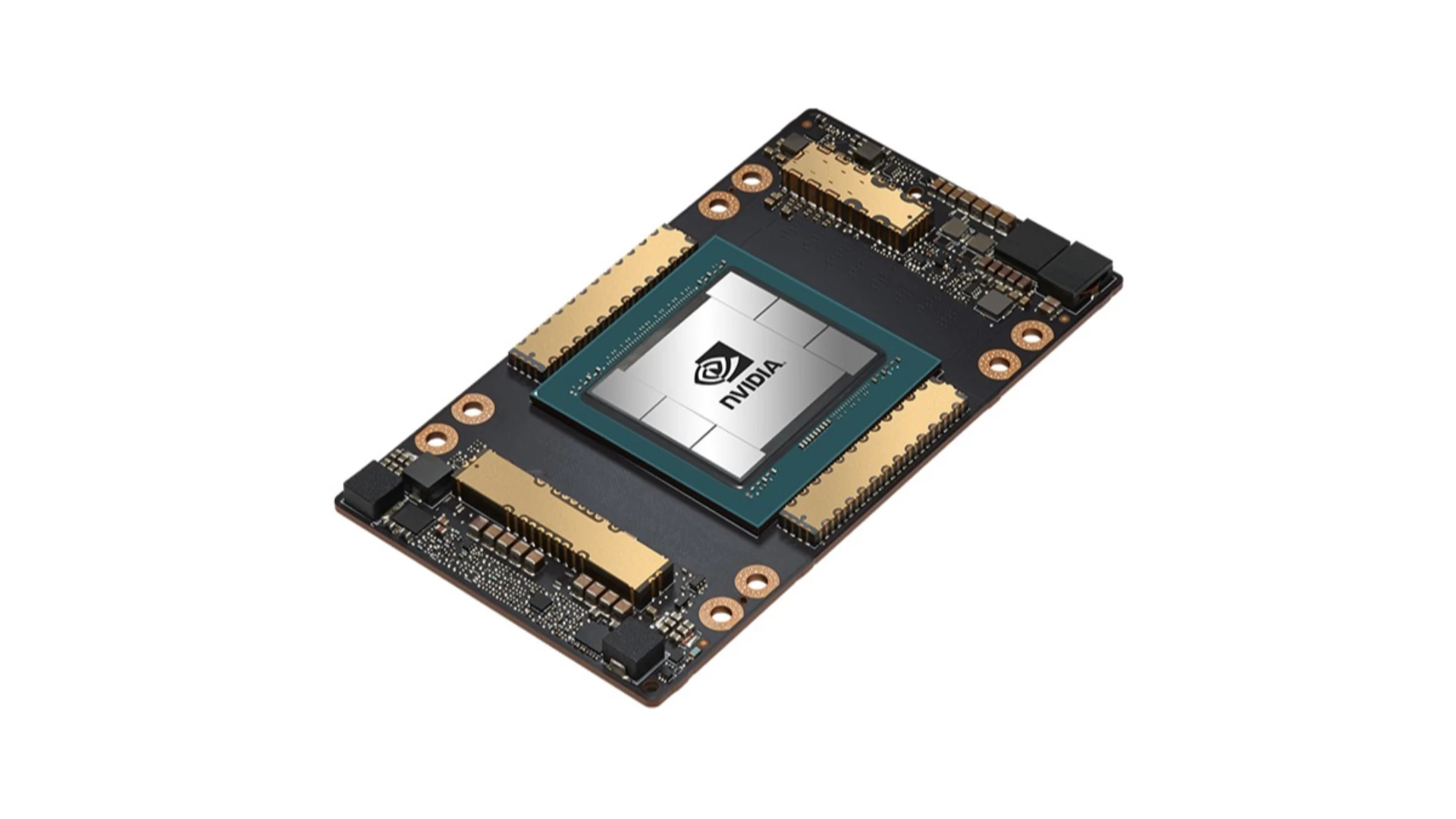

When it comes to AI and GPUs, the name Nvidia is almost synonymous with the field. There are several reasons for this, but the most important are the CUDA platform and Tensor Cores.

CUDA (Compute Unified Device Architecture) is a parallel computing platform and programming model developed by Nvidia. It allows GPUs to be used for general-purpose computing (GPGPU), not just graphics. Essentially, CUDA acts as a "bridge" between software and GPU hardware, making it easier for developers to harness the computational power of GPUs. For me, the biggest advantage of CUDA is that it provides a relatively unified and well-documented environment, significantly simplifying AI development. This is why it became the industry standard.

Tensor Cores are specialized units within Nvidia GPUs designed specifically to accelerate the matrix operations used in deep learning. These operations form the foundation of neural networks, and Tensor Cores significantly increase the speed and efficiency with which they are computed. Each new generation of Tensor Cores has brought higher throughput and support for additional number formats.
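To make "matrix operations" concrete: the core building block is a multiply-accumulate on matrices, D = A × B + C. The pure-Python sketch below only illustrates the arithmetic. The function name `mma` and the 2×2 example values are illustrative assumptions, and real Tensor Cores perform this step in hardware on small fixed-size tiles.

```python
def mma(a, b, c):
    """Return D = A x B + C for matrices given as lists of rows."""
    rows, inner, cols = len(a), len(b), len(b[0])
    assert all(len(row) == inner for row in a), "A columns must match B rows"
    assert len(c) == rows and all(len(row) == cols for row in c)

    d = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            # Each output element depends only on one row of A, one column
            # of B and one element of C, so all of them can be computed
            # independently. That independence is what a GPU exploits.
            acc = c[i][j]
            for k in range(inner):
                acc += a[i][k] * b[k][j]
            d[i][j] = acc
    return d


A = [[1.0, 2.0], [3.0, 4.0]]
B = [[5.0, 6.0], [7.0, 8.0]]
C = [[0.5, 0.5], [0.5, 0.5]]
D = mma(A, B, C)
```

A CPU walks through these loops one element at a time, while a GPU can assign each output element (or each tile) to its own execution unit.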

Number Formats in AI: Why Are They Important?

Artificial intelligence, especially deep learning, requires vast amounts of computation. The number formats used during these calculations (i.e., how numbers are stored and handled by the computer) directly influence:

- Speed: Lower precision formats (fewer bits) enable faster computations.

- Memory Requirements: Fewer bits require less memory, which is critical for loading and running large models.

- Power Consumption: Processing fewer bits generally consumes less energy.

- Accuracy: Higher precision formats (more bits) yield more accurate results, but this can come at the cost of speed, memory, and power.

Hardware support for these number formats is critically important for GPUs (and other AI accelerators) because it determines the fundamental efficiency of the computations.
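The trade-off between memory and accuracy can be seen with nothing more than Python's standard `struct` module, which can store a value as a 64-bit, 32-bit, or 16-bit IEEE float. This is a minimal sketch: the helper names `round_trip` and `describe` are illustrative, and formats such as TF32, FP8, or FP4 are not covered because `struct` does not support them.

```python
import struct

# struct format codes: d = 64-bit, f = 32-bit, e = 16-bit IEEE float
FORMATS = {"FP64": "<d", "FP32": "<f", "FP16": "<e"}


def round_trip(value, fmt):
    """Store a Python float in the given format and read it back."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


def describe(value):
    """Return bytes per value and absolute rounding error for each format."""
    report = {}
    for name, fmt in FORMATS.items():
        stored = round_trip(value, fmt)
        report[name] = {
            "bytes": struct.calcsize(fmt),
            "stored": stored,
            "abs_error": abs(stored - value),
        }
    return report


if __name__ == "__main__":
    for name, info in describe(3.14159265358979).items():
        print(f"{name}: {info['bytes']} bytes, "
              f"stored={info['stored']!r}, error={info['abs_error']:.2e}")
```

Halving the number of bits halves the storage per value, and the rounding error grows accordingly. Whether that error matters depends on the model and the task.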

More information about commonly used number formats in AI and the importance of hardware support.

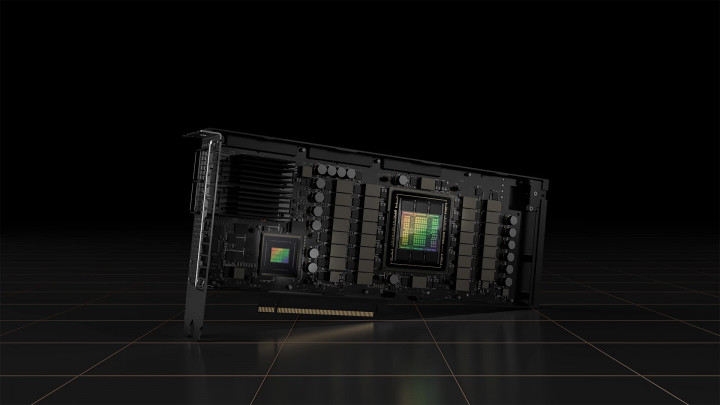

Nvidia Architectures in Chronological Order for AI Execution

Tesla (2006)

The first CUDA-capable architecture, which launched the GPGPU revolution, although it did not yet feature Tensor Cores.

Not recommended for AI execution, perhaps only for experimentation if acquired very cheaply. No dedicated AI acceleration. Limited CUDA support, very old drivers.

Typical models: GeForce 8, 9, 200, 300 series

Fermi (2010)

Not recommended for AI execution. Few CUDA cores, low computational performance. Suitable only for very simple models, if at all. Likely not worth the time and effort.

Typical models: GeForce 400, 500 series

Kepler (2012)

Focused on improving efficiency, introduced dynamic parallelism. Better CUDA support, but still insufficient for modern AI. Could be a low-budget entry point, but with compromises. Important to check driver support!

Typical professional models: Tesla K10, K20, K20X, K40 (12 GB), K80, Quadro K2000, K4000, K5000, K6000, etc. Consumer: GeForce 600, 700 series

Maxwell (2014)

The main goal was a significant increase in energy efficiency, along with better CUDA performance. Can be used for smaller models at lower resolutions.

Typical professional models: Tesla M4, M40, M60, Quadro M2000, M4000, M5000, M6000, etc. Consumer: GeForce GTX 750 Ti, 900 series, GTX Titan X

Pascal (2016)

The introduction of NVLink (faster GPU-GPU communication) and FP16 (half-precision floating-point) support marked a significant step forward for AI. Note that NVLink and full-speed FP16 were features of the P100 and Quadro GP100; the consumer GeForce 10 cards run FP16 at only a small fraction of their FP32 rate. Suitable for running medium-sized models. The 1080 Ti is still a capable card today. The GTX 1060 6GB is the entry level, while the 1070, 1080, and 1080 Ti are suitable for more serious tasks.

Typical professional models: Tesla P4 (8GB), P40 (24GB), P100 (16GB), Quadro P2000, P4000, P5000, P6000, GP100, etc. Consumer: GeForce 10 series (GTX 1060, 1070, 1080, 1080 Ti, Titan Xp)

Volta (2017)

This is where Tensor Cores first appeared, giving a huge boost to deep learning performance. From the perspective of running LLMs, this can be considered a major milestone. The professional cards are expensive but offer excellent AI performance for smaller models.

Typical models: Tesla V100 (16/32GB, HBM2), Quadro GV100, Titan V

Turing (2018)

Alongside Ray Tracing (RT) cores, Turing added INT8 (8-bit integer) support to its Tensor Cores, aimed at accelerating inference. An excellent choice for more serious AI projects, provided the card has sufficient VRAM.

Typical professional models: Tesla T4 (16GB, GDDR6), T10, T40 (24GB, GDDR6), Quadro RTX 4000, 5000, 6000, 8000. Consumer: GeForce RTX 20 series (RTX 2060, 2070, 2080, 2080 Ti), Titan RTX

Ampere (2020)

Second-generation RT cores, third-generation Tensor Cores, and the introduction of the TF32 (TensorFloat-32) format. Suitable for most AI tasks.

Typical professional models: A100 (40/80GB, HBM2e), A40 (48GB, GDDR6), A30, A16, A10, RTX A4000, A4500, A5000, A5500, A6000, etc. Consumer: GeForce RTX 30 series (RTX 3060, RTX 3070, RTX 3080, RTX 3090 (24GB, GDDR6X))

Hopper (2022)

Fourth-generation Tensor Cores, Transformer Engine (specifically optimized for LLMs), and FP8 support. Designed for data centers. Extraordinary performance at an extreme price.

Typical professional models: H100 (80GB HBM3), H200 (141GB, HBM3e)

Ada Lovelace (2022)

Fourth-generation Tensor Cores, DLSS 3, and optimized energy efficiency.

Typical professional models: RTX 6000 Ada Generation (48GB, GDDR6 ECC), L4, L40, L40S, etc. Consumer: GeForce RTX 40 series (RTX 4060, RTX 4070, RTX 4080, RTX 4090 (24GB, GDDR6X))

Blackwell (2024)

The latest generation, promising even greater performance, new Tensor Cores, and enhanced NVLink. Hardware support for FP4.

Typical professional models: B100, B200, GB200. Consumer: GeForce RTX 50 series (Expected: RTX 5060, RTX 5070, RTX 5080, RTX 5090 (potentially 32GB GDDR7))
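The timeline above can be condensed into a small lookup table that answers "which generation do I need for a given number format?". This is only a sketch built from the formats this article credits to each architecture. The names `TIMELINE`, `first_architecture_with`, and `formats_up_to` are illustrative, and the carry-forward rule in `formats_up_to` is a simplifying assumption: actual support and speed vary by individual chip, so check the specification of the specific card.

```python
# (architecture, launch year, number formats the article credits it with)
TIMELINE = [
    ("Tesla", 2006, []),
    ("Fermi", 2010, []),
    ("Kepler", 2012, []),
    ("Maxwell", 2014, []),
    ("Pascal", 2016, ["FP16"]),
    ("Volta", 2017, []),          # first Tensor Cores, no new format listed
    ("Turing", 2018, ["INT8"]),
    ("Ampere", 2020, ["TF32"]),
    ("Hopper", 2022, ["FP8"]),
    ("Ada Lovelace", 2022, []),
    ("Blackwell", 2024, ["FP4"]),
]


def first_architecture_with(fmt):
    """Return the first architecture listed as introducing fmt, or None."""
    for name, _year, introduced in TIMELINE:
        if fmt in introduced:
            return name
    return None


def formats_up_to(arch):
    """List formats introduced up to and including arch.

    Assumption: a format, once introduced, carries forward to every
    later entry in the list.
    """
    seen = []
    for name, _year, introduced in TIMELINE:
        seen.extend(introduced)
        if name == arch:
            return seen
    raise KeyError(arch)


if __name__ == "__main__":
    print(first_architecture_with("FP8"))
    print(formats_up_to("Ampere"))
```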

Consumer vs. Professional GPUs

Consumer cards, like the GeForce RTX series, are primarily designed for gamers and content creators. They generally offer a better price-to-performance ratio in terms of raw computational power. This means you can get more compute capacity for less money, which can be attractive for hobbyist AI users or smaller projects.

Professional cards, on the other hand, are specifically built for researchers, data scientists, and enterprise AI developers. They are more expensive but offer several advantages that can justify the higher price:

- Larger VRAM Capacity: This is crucial for running and training large models, such as LLMs. Professional cards often come with significantly more VRAM than their consumer counterparts.

- ECC Memory: ECC (Error Correction Code) memory can detect and correct memory errors, increasing reliability, especially during long-running, high-load computations.

- Certified Drivers: Professional cards come with drivers that are specifically optimized and certified for professional applications (e.g., CAD, simulations).

- Longer Product Support: Professional cards typically receive support and updates for a longer period, which is important in enterprise environments.

- Specialized Features: These include NVLink (faster GPU-GPU communication), virtualization support, and better cooling solutions.
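To see why VRAM capacity weighs so heavily in this comparison, the sketch below estimates the memory needed for a model's weights and checks which cards could hold them. The VRAM figures are the ones quoted earlier in this article (the 80GB variant is used for the A100). The 1.2 overhead factor for activations and caches is a rough assumption, not a measured value, and the function names are illustrative.

```python
BITS_PER_PARAM = {"FP32": 32, "FP16": 16, "INT8": 8, "FP4": 4}

# VRAM in GB, as listed in the architecture overview above
CARDS_GB = {
    "Tesla T4": 16,
    "Tesla P40": 24,
    "GeForce RTX 3090": 24,
    "GeForce RTX 4090": 24,
    "A40": 48,
    "RTX 6000 Ada": 48,
    "A100": 80,
    "H100": 80,
    "H200": 141,
}


def weight_gb(params_billion, fmt):
    """Approximate size of the weights alone, in decimal gigabytes."""
    return params_billion * BITS_PER_PARAM[fmt] / 8


def cards_that_fit(params_billion, fmt, overhead=1.2):
    """Return cards whose VRAM covers the weights plus an assumed overhead."""
    needed = weight_gb(params_billion, fmt) * overhead
    return [name for name, vram in CARDS_GB.items() if vram >= needed]


if __name__ == "__main__":
    for fmt in BITS_PER_PARAM:
        print(fmt, cards_that_fit(70, fmt))
```

Under these assumptions, a 7-billion-parameter model in FP16 needs about 14 GB for weights alone, which already rules out a 16GB card once overhead is included. Dropping to a lower-precision format is often what makes a larger model fit on a single consumer card.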