LLM Model Size, Memory Requirements, and Quantization

Large Language Models (LLMs), such as GPT-3, LLaMA, or PaLM, are neural networks of enormous size. Their size is typically characterized by the number of parameters (e.g., 7B, 14B, 72B, meaning 7 billion, 14 billion, and 72 billion parameters). A parameter is essentially a weight or bias value within the network. These parameters are learned during training and collectively represent the model's "knowledge," determining how it processes information and generates outputs. Modern LLMs possess billions, sometimes even hundreds of billions, of parameters.
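To make "a weight or bias value" concrete, here is a small Python sketch that counts the parameters of fully connected (dense) layers. Each such layer has one weight per input-output pair plus one bias per output. The function names and layer sizes are hypothetical illustrations, not taken from any particular model; real LLMs also contain attention, embedding, and normalization parameters.

```python
def dense_layer_params(d_in: int, d_out: int) -> int:
    """Parameters of a dense layer y = W x + b.

    W holds d_out * d_in weights and b holds d_out biases.
    """
    return d_in * d_out + d_out


def stack_params(layer_sizes: list[int]) -> int:
    """Total parameters of a stack of dense layers.

    layer_sizes = [d0, d1, ..., dn] describes layers d0->d1, d1->d2, ...
    """
    return sum(
        dense_layer_params(d_in, d_out)
        for d_in, d_out in zip(layer_sizes, layer_sizes[1:])
    )


if __name__ == "__main__":
    # A single hypothetical 4096 x 4096 layer already has ~16.8 million parameters.
    print(dense_layer_params(4096, 4096))  # 16781312
    # Hypothetical two-layer stack: 4096 -> 16384 -> 4096.
    total = stack_params([4096, 16384, 4096])
    print(f"{total:,} parameters ({total / 1e9:.3f}B)")
```

Billions of parameters arise from stacking many such layers of this width.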

Hundreds of billions of parameters translate into substantial memory requirements:

- Storage: The model's parameters must be stored on persistent storage, like a hard drive or SSD.

- Loading: To run the model (perform inference), the parameters need to be loaded into the memory of the GPU (or other accelerator).

- Computation: During model execution, the GPU needs constant access to these parameters to perform calculations.

Example:

Let's assume a model has 175 billion parameters, and each parameter is stored in FP32 (32-bit floating-point) format.

- One FP32 number occupies 4 bytes (32 bits / 8 bits per byte).

- 175 billion parameters * 4 bytes/parameter = 700 billion bytes = 700 GB.

Therefore, just storing the model parameters requires 700 GB of space! Loading and running the model requires at least this much VRAM (Video RAM), and in practice more, because activations and, during text generation, the KV cache also occupy GPU memory. Since 700 GB far exceeds the memory of any single GPU, even a high-end NVIDIA A100 or H100, a model of this size must be split across several such GPUs. If, instead of 4 bytes, each parameter occupied only 1 byte (as with the INT8 format), the memory requirement in gigabytes would roughly equal the number of parameters in billions. For example, a 175B-parameter model using INT8 would require approximately 175 GB of VRAM for its weights.
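The arithmetic above applies to any parameter count and format. The following Python sketch assumes decimal gigabytes (1 GB = 10^9 bytes, as in the example) and counts weights only, ignoring activations, the KV cache, and framework overhead. The table name and function are hypothetical, and the INT4 entry assumes two 4-bit values packed into each byte.

```python
# Bytes needed to store one parameter in each numerical format.
BYTES_PER_PARAM = {
    "FP32": 4.0,
    "FP16": 2.0,
    "BF16": 2.0,
    "INT8": 1.0,
    "FP8": 1.0,
    "INT4": 0.5,  # assumes two 4-bit values packed into one byte
}


def weight_memory_gb(num_params: float, fmt: str) -> float:
    """Memory for the model weights alone, in decimal gigabytes (1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[fmt] / 1e9


if __name__ == "__main__":
    params = 175e9  # the 175-billion-parameter example from the text
    for fmt in ("FP32", "FP16", "INT8", "INT4"):
        print(f"{fmt}: {weight_memory_gb(params, fmt):.1f} GB")
```

For the 175B example, this prints 700.0 GB for FP32, 350.0 GB for FP16, 175.0 GB for INT8, and 87.5 GB for INT4.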

Quantization: Reducing Memory Requirements

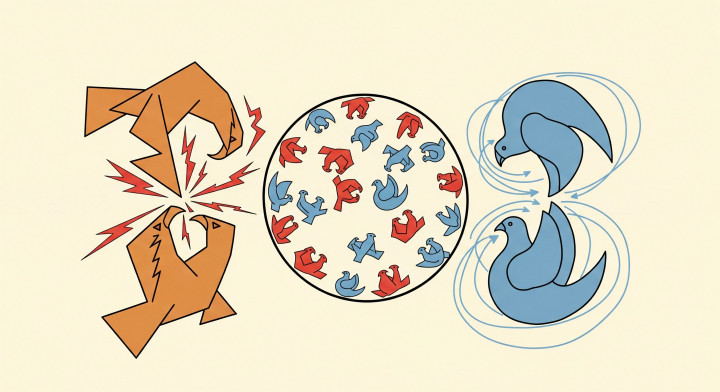

Quantization is a technique aimed at reducing the model's size and memory footprint, usually by sacrificing an acceptable amount of precision. During quantization, the model's parameters (weights and sometimes activations) are converted to a lower-precision numerical format.

How does quantization work?

- Original Format: Models are typically trained using FP32, FP16 (16-bit floating-point), or BF16 (bfloat16) formats, often combined in mixed-precision training.

- Target Format: During quantization, parameters are converted to formats like INT8 (8-bit integer), FP8 (8-bit floating-point), or even lower-precision types such as INT4.

- Mapping: Quantization involves creating a mapping between the range of values in the original format (e.g., FP32) and the range of values in the target format (e.g., INT8). This mapping defines how to represent the original values using the limited range of the target format. It can be linear, where a scale factor (and optionally a zero point) spaces the representable values evenly, or non-linear, where they are spaced unevenly.

- Rounding: Based on the mapping, the original values are rounded to the nearest representable value in the target format. Values outside the representable range are clipped to its limits.

- Information Loss: This rounding process inevitably leads to some loss of information, which can result in a decrease in the model's accuracy. The challenge in quantization lies in minimizing this loss of precision.
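The mapping, rounding, and information-loss steps can be sketched in a few lines of Python. This sketch assumes the simplest linear scheme, symmetric per-tensor "absmax" quantization. A single scale factor s = max|x| / 127 maps each value to an integer q = clamp(round(x / s), -127, 127), and the value is recovered approximately as q · s. Real toolkits offer other variants, such as zero points, per-channel scales, and non-linear codebooks. The function names here are hypothetical.

```python
def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Symmetric linear (absmax) quantization of a tensor to INT8.

    Returns the integer codes and the single scale factor needed to map back.
    """
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid division by zero
    codes = []
    for v in values:
        q = round(v / scale)          # rounding: nearest step (ties go to even)
        q = max(-127, min(127, q))    # clipping; -127..127 keeps the range symmetric
        codes.append(q)
    return codes, scale


def dequantize_int8(codes: list[int], scale: float) -> list[float]:
    """Map INT8 codes back to approximate floating-point values."""
    return [q * scale for q in codes]


if __name__ == "__main__":
    weights = [0.12, -0.5, 0.031, 0.9, -0.27]  # hypothetical FP32 weights
    codes, scale = quantize_int8(weights)
    restored = dequantize_int8(codes, scale)
    for w, q, r in zip(weights, codes, restored):
        print(f"{w:+.4f} -> {q:+4d} -> {r:+.4f} (error {abs(w - r):.5f})")
```

Each restored value differs from the original by at most half a step (s/2). Because the scale comes from the largest absolute value, a single outlier widens the step for every other value, which is one way this rounding error grows.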

Example (INT8 Quantization):

- FP32: One number occupies 4 bytes.

- INT8: One number occupies 1 byte.

If we quantize a 175-billion-parameter model from FP32 to INT8, the size of its weights shrinks from 700 GB to about 175 GB (plus a small overhead for the parameters of the quantization mapping, such as scale factors). This is a significant saving, making it possible to run the model on fewer or less expensive GPUs, albeit often with a slight decrease in accuracy.

Quantization Methods:

- Post-Training Quantization (PTQ): Quantization is performed after the model has been fully trained. This is the simplest method but may lead to a greater loss in accuracy.

- Quantization-Aware Training (QAT): Quantization operations are simulated or incorporated into the training process itself. The model learns to compensate for the precision loss caused by quantization. This typically yields better accuracy than PTQ but requires more time and computational resources for training.
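QAT commonly simulates quantization with "fake quantization". The forward pass rounds weights to the quantized grid and immediately maps them back to floating point, while the optimizer updates a full-precision copy of the weights. Rounding has a zero gradient almost everywhere, so the backward pass usually uses a straight-through estimator (STE), which treats rounding as the identity. The toy Python sketch below illustrates only these mechanics under stated assumptions: one weight, a deliberately coarse hypothetical scale of 0.25, and a target slope of 0.5 chosen to lie on the grid. The function names are hypothetical, and this is not a production QAT recipe.

```python
def fake_quantize(w: float, scale: float, qmin: int = -127, qmax: int = 127) -> float:
    """Forward pass: snap w to the integer grid, then map back to float."""
    q = max(qmin, min(qmax, round(w / scale)))
    return q * scale


def ste_grad(upstream: float, w: float, scale: float,
             qmin: int = -127, qmax: int = 127) -> float:
    """Backward pass (straight-through estimator).

    Rounding is treated as the identity, so the gradient passes through,
    except where w lies outside the grid limits (clipped), where it is zeroed.
    """
    inside = qmin * scale <= w <= qmax * scale
    return upstream if inside else 0.0


def train_qat(xs: list[float], ys: list[float], w: float = 0.1,
              scale: float = 0.25, lr: float = 0.05, steps: int = 20) -> float:
    """Fit y approximately equal to w * x with fake-quantized w.

    Returns the full-precision ("shadow") weight.
    """
    n = len(xs)
    for _ in range(steps):
        wq = fake_quantize(w, scale)  # the model only ever "sees" wq
        # Gradient of the mean squared error with respect to wq.
        grad_wq = sum(2 * (wq * x - y) * x for x, y in zip(xs, ys)) / n
        w -= lr * ste_grad(grad_wq, w, scale)  # update the float shadow weight
    return w


if __name__ == "__main__":
    xs = [1.0, 2.0, 3.0]
    ys = [0.5 * x for x in xs]  # target slope 0.5 lies on the 0.25 grid
    w = train_qat(xs, ys)
    print(f"shadow weight {w:.4f}, deployed quantized weight {fake_quantize(w, 0.25)}")
```

Here the shadow weight settles near 0.45, and its quantized value is exactly 0.5. Without the STE, the gradient through `round` would be zero, so the weight would stay at its starting value of 0.1, which quantizes to 0.0.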

Summary

Quantization is an essential technique for efficiently running large-scale LLMs. It allows for a significant reduction in model size and memory requirements, making these powerful models accessible to a wider range of users and hardware. However, quantization involves a trade-off with accuracy, so selecting the appropriate quantization method and numerical format for the specific task is crucial. Hardware support (e.g., efficient INT8 operations on GPUs) is key for running quantized models quickly and effectively. The evolution of numerical formats (FP32, FP16, BF16, INT8, FP8) and their hardware support is directly linked to quantization, collectively enabling the creation and deployment of increasingly large and complex LLMs.