Nvidia Unveils Blackwell: The Next-Generation AI Superchip Platform

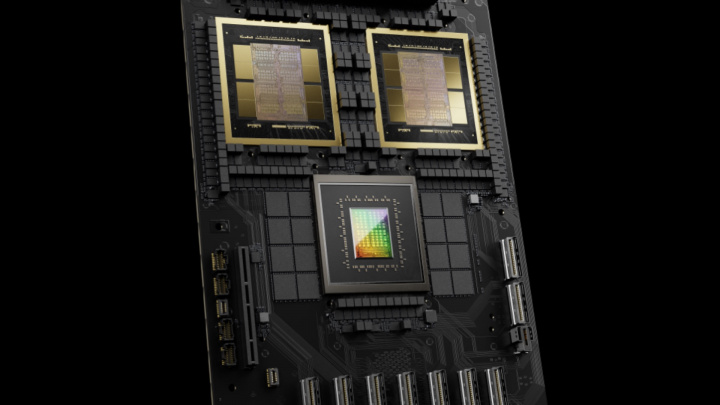

Nvidia has unveiled its highly anticipated next-generation accelerated computing platform, built around the Blackwell GPU. Announced at the company's GTC 2024 conference, the new architecture is named after the mathematician David Blackwell and succeeds the Hopper generation (H100/H200). Blackwell is also Nvidia's first chiplet-based design for its data center GPUs: each GPU integrates two large dies manufactured on a custom TSMC 4NP process node.

The Blackwell platform enables companies to build and run AI models at unprecedented scale, potentially reaching trillions of parameters. Nvidia positions this capability as key to breakthroughs in data processing, engineering simulation, electronic design automation (EDA), computer-aided drug design, quantum computing, and the rapidly advancing field of generative AI.

The Blackwell architecture introduces several key innovations aimed at tackling the immense computational demands of modern AI:

- It incorporates six new technologies designed to boost accelerated computing for AI, data analytics, and high-performance computing (HPC). These include a dedicated RAS (Reliability, Availability, and Serviceability) Engine for improved system uptime and Secure AI, which provides confidential computing capabilities.

- It features new Tensor Cores and an enhanced TensorRT-LLM Compiler. According to Nvidia, these can together reduce the operating cost and energy consumption of large language model (LLM) inference by up to 25 times compared with the previous Hopper generation.

- The platform has already garnered strong support from major industry players, including Amazon Web Services (AWS), Dell Technologies, Google, Meta, Microsoft, OpenAI, Oracle, Tesla, and xAI, signaling expected broad adoption across the cloud and enterprise sectors.

Key Technological Innovations:

- A second-generation Transformer Engine combines new micro-tensor scaling support with advanced dynamic range management algorithms to enable new 4-bit floating-point (FP4) precision for AI inference. Nvidia says this doubles the compute performance and supported model size while maintaining high accuracy, which is essential for running massive foundation models.

- Fifth-generation NVLink, Nvidia's high-speed interconnect, delivers 1.8 terabytes per second (TB/s) of bidirectional throughput per GPU. Through the new NVLink Switch 7.2T system, it provides high-bandwidth communication among up to 576 GPUs across large-scale server racks, which is crucial for training models with trillions of parameters.

- A dedicated Decompression Engine supports the latest compression formats to accelerate database queries and data analytics. Decompression is often a bottleneck in the data processing that feeds AI workflows, so handling it in dedicated hardware can significantly speed up these tasks.
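To make the micro-tensor scaling idea in the Transformer Engine item above more concrete, here is a minimal Python sketch of block-scaled 4-bit quantization. It is not Nvidia's implementation: it assumes the common E2M1 FP4 value grid, an illustrative block size, a plain float scale per block (real microscaling formats typically restrict scales to compact encodings), and simple round-to-nearest, and it keeps the FP4 codes as Python floats rather than packed 4-bit fields. The helper names `quantize_blocks` and `dequantize_blocks` are hypothetical.

```python
from typing import List, Tuple

# Non-negative magnitudes representable by an E2M1 4-bit float
# (1 sign bit, 2 exponent bits, 1 mantissa bit). Assumed layout.
FP4_E2M1_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
FP4_MAX = FP4_E2M1_GRID[-1]


def quantize_to_grid(x: float) -> float:
    """Round x to the nearest signed E2M1 value (ties go to the smaller magnitude)."""
    magnitude = min(FP4_E2M1_GRID, key=lambda g: abs(abs(x) - g))
    return magnitude if x >= 0 else -magnitude


def quantize_blocks(values: List[float], block_size: int = 32) -> List[Tuple[float, List[float]]]:
    """Give each block of `block_size` values its own scale factor.

    The scale maps the block's largest magnitude onto FP4_MAX, so a large
    outlier only coarsens the values that share its block.
    """
    blocks = []
    for start in range(0, len(values), block_size):
        block = values[start:start + block_size]
        amax = max(abs(v) for v in block)
        scale = amax / FP4_MAX if amax > 0 else 1.0
        blocks.append((scale, [quantize_to_grid(v / scale) for v in block]))
    return blocks


def dequantize_blocks(blocks: List[Tuple[float, List[float]]]) -> List[float]:
    """Multiply each FP4 code by its block's scale to recover approximate values."""
    return [code * scale for scale, codes in blocks for code in codes]


if __name__ == "__main__":
    data = [0.1, -0.2, 0.3, 0.6, 10.0, -5.0, 2.5, 1.0]
    per_block = dequantize_blocks(quantize_blocks(data, block_size=4))
    per_tensor = dequantize_blocks(quantize_blocks(data, block_size=len(data)))
    print("per-block :", [round(v, 3) for v in per_block])
    print("per-tensor:", [round(v, 3) for v in per_tensor])
```

In the demo, giving each block of four values its own scale reproduces the first block almost exactly, while a single per-tensor scale driven by the 10.0 outlier rounds 0.1, -0.2, and 0.3 to zero. Keeping an outlier's influence local to its block is the motivation behind fine-grained scaling.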
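The 1.8 TB/s figure in the NVLink item above can be turned into a rough communication estimate. The sketch below assumes the bandwidth splits evenly into 900 GB/s per direction and applies the bandwidth term of a textbook ring all-reduce; latency, protocol overhead, and the actual NVLink Switch topology are ignored, and the 10 GB gradient payload is hypothetical. Real collective libraries such as NCCL choose their own algorithms, so treat the result as an order-of-magnitude bound rather than a measured number.

```python
# Figure quoted for fifth-generation NVLink: 1.8 TB/s bidirectional per GPU.
NVLINK5_BIDIRECTIONAL_BYTES_PER_S = 1.8e12
# Assumption: bandwidth is split evenly, giving 900 GB/s in each direction.
PER_DIRECTION_BYTES_PER_S = NVLINK5_BIDIRECTIONAL_BYTES_PER_S / 2


def ring_allreduce_seconds(payload_bytes: float, num_gpus: int,
                           link_bytes_per_s: float = PER_DIRECTION_BYTES_PER_S) -> float:
    """Bandwidth term of a ring all-reduce (latency and overheads ignored).

    Each GPU sends 2 * (N - 1) / N times the payload: one reduce-scatter
    pass plus one all-gather pass around the ring.
    """
    if num_gpus < 2:
        return 0.0
    bytes_sent_per_gpu = 2 * (num_gpus - 1) / num_gpus * payload_bytes
    return bytes_sent_per_gpu / link_bytes_per_s


if __name__ == "__main__":
    payload = 10e9  # hypothetical: 10 GB of gradients, e.g. 5B parameters in 16-bit
    for n in (8, 64, 576):
        print(f"{n:>3} GPUs: ~{ring_allreduce_seconds(payload, n) * 1e3:.1f} ms")
```

Because each GPU sends 2(N - 1)/N of the payload, the estimate approaches 2 × payload / bandwidth (about 22 ms for this payload) as N grows, so per-GPU link bandwidth rather than GPU count dominates this term.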
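The Decompression Engine item above describes a step that every query on compressed data has to pass through. The Python sketch below runs that pipeline on the CPU: it stores a hypothetical integer column Deflate-compressed with the standard-library `zlib` module, then fully decompresses it before filtering. Deflate is only a stand-in format here, the column and query are invented for illustration, and nothing in the code touches Blackwell hardware; it simply marks the stage (`decompress_column`) that a dedicated engine would take off the CPU.

```python
import struct
import zlib
from typing import List


def compress_column(values: List[int]) -> bytes:
    """Pack values as little-endian int32 and Deflate-compress the bytes."""
    raw = struct.pack(f"<{len(values)}i", *values)
    return zlib.compress(raw, level=6)


def decompress_column(blob: bytes) -> List[int]:
    """Inflate the blob and unpack it back into int32 values.

    This is the stage a hardware decompression engine would offload.
    """
    raw = zlib.decompress(blob)
    return list(struct.unpack(f"<{len(raw) // 4}i", raw))


def count_greater_than(blob: bytes, threshold: int) -> int:
    """Hypothetical query: SELECT COUNT(*) FROM column WHERE value > threshold."""
    column = decompress_column(blob)  # must finish before any row can be filtered
    return sum(1 for v in column if v > threshold)


if __name__ == "__main__":
    prices = [i % 100 for i in range(10_000)]  # hypothetical column
    blob = compress_column(prices)
    print(f"{4 * len(prices)} raw bytes -> {len(blob)} compressed bytes")
    print("rows with value > 89:", count_greater_than(blob, 89))
```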

Applying the Blackwell Platform:

- The DGX SuperPOD™ powered by NVIDIA GB200 Grace Blackwell Superchips is the next step in Nvidia's AI supercomputing infrastructure. Designed for processing trillion-parameter generative AI models, it offers high performance, scales to tens of thousands of GPUs, and uses an energy-efficient, liquid-cooled rack-scale design.

- The GB200 Grace Blackwell Superchip itself connects two Blackwell B200 GPUs to an NVIDIA Grace CPU over a 900 GB/s chip-to-chip link. Nvidia claims that systems built from these tightly coupled superchips deliver up to 30 times the LLM inference performance of the same number of H100 GPUs, along with substantial energy efficiency improvements.

The NVIDIA Blackwell platform marks a significant advance in efficiency, performance, and scalability for the most demanding AI applications. Backing from leading technology companies, a chiplet architecture built on advanced process technology, and its potential to transform a range of industries underscore its importance in the ongoing evolution of computing and artificial intelligence.